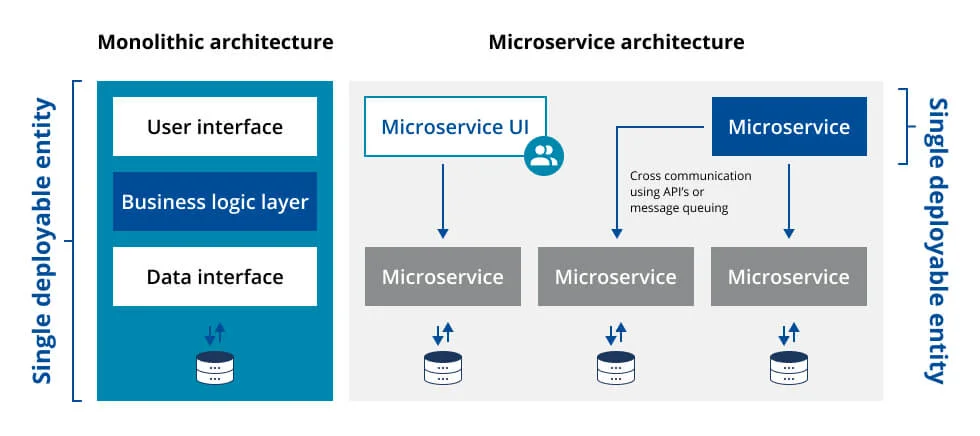

What’s not to like about microservices? Organizing application services into a set of semantically related resources represented as REST URLs makes sense for both development and usage (see Figure 1). And making a single two-pizza team completely responsible for every aspect of a microservice, from design and implementation to deployment, testing, and maintenance, creates companies that can turn on a dime. That’s the good news.

Figure 1: Microservice architecture is discrete, flexible, and well suited for development by small teams

The bad news is that when it comes to planning microservices load tests, we make mistakes. I know; I’ve made my share. The following are the top five mistakes I’ve made when load testing microservices, along with the lessons I learned from each. Hopefully these lessons will help you avoid making the same mistakes.

1. Don’t go chasing 100% testing all the time, pick the high-risk services

Automated testing has become an important part of the Continuous Integration/Continuous Delivery (CI/CD) process. Load testing is particularly important for microservices because they need to execute fast at scale. However, long-running load tests can become a bottleneck in the CI/CD process if they are performed too early in the software development lifecycle. So we have a paradox: test too much too early and we slow development down; test too late and we risk paying the higher cost of fixing code late in the development cycle. What’s the solution?

The trick is to load test high-risk microservices at the start of the development cycle and leave less risky services for later. High-risk microservices are the low-hanging fruit: they are worth the time it takes to load test them. If a problem is discovered, the money saved by fixing it early outweighs the cost of the time it takes to run the test. It’s a net gain. Time-intensive load testing of low-risk microservices can take place later in the software development lifecycle.
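One way to make this split concrete is to score each service and route only the high scorers into early pipeline stages. The following is a minimal Python sketch under assumed inputs: the `ServiceRisk` fields (traffic, business impact, and change rate, each rated 1 to 5), the multiplicative score, and the threshold are all hypothetical choices, not a standard formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceRisk:
    # Hypothetical 1-5 ratings; use whatever risk factors your team agrees on.
    name: str
    traffic: int          # expected request volume
    business_impact: int  # cost of an outage or slowdown
    change_rate: int      # how much the code is changing

    @property
    def score(self):
        # Simple multiplicative score: a service must rate high on several factors to rank high.
        return self.traffic * self.business_impact * self.change_rate


def split_by_risk(services, threshold):
    """Return (load test now, defer to later stages), each ordered highest risk first."""
    ranked = sorted(services, key=lambda s: s.score, reverse=True)
    early = [s.name for s in ranked if s.score >= threshold]
    later = [s.name for s in ranked if s.score < threshold]
    return early, later
```

A CI job could then load test the `early` list on every build and schedule the `later` list for a nightly or pre-release stage.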

2. Don’t waste time waiting around for fully functional dependencies, use service virtualization

Dependencies can be the bane of software development. One team has the code ready to go but is held back from releasing it because another team has yet to deliver a dependency. This is a mistake. Code that’s ready to go should go.

The way to address this type of delay is to use service virtualization. You can think of service virtualization as mocking out a service: you create a stand-in microservice that exposes the URLs you require. These URLs accept data and respond according to the microservice specification, but the responses are very limited in scope. For example, if you mock the endpoint /customers/{id}, the response will always be:

{
  "id": 123,
  "first_name": "John",
  "last_name": "Doe"
}

Here, 123 is the id of the customer.

Although the behavior is limited, it does serve the purpose required: to provide the consumable functionality of a dependency that is still under development.
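To show how little is needed, here is a minimal sketch of such a stub using only Python’s standard library `http.server`. It returns the same canned customer record for any `/customers/{id}` request, exactly as described above, and a 404 for anything else. The class and function names and the default port are illustrative assumptions, not part of any particular virtualization tool.

```python
import json
import re
from http.server import BaseHTTPRequestHandler, HTTPServer

# Canned record returned for every customer lookup, mirroring the example above.
CANNED_CUSTOMER = {"id": 123, "first_name": "John", "last_name": "Doe"}

# Matches /customers/{id}; the requested id itself is ignored by this stub.
CUSTOMER_PATH = re.compile(r"^/customers/[^/]+$")


class CustomerStub(BaseHTTPRequestHandler):
    def do_GET(self):
        if CUSTOMER_PATH.match(self.path):
            body = json.dumps(CANNED_CUSTOMER).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            # Any URL outside the virtualized contract is reported as missing.
            self.send_error(404, "Not part of the virtualized service")

    def log_message(self, format, *args):
        # Silence per-request logging so load-test output stays readable.
        pass


def make_stub_server(port=8080):
    """Create (but do not start) the stub server; port 0 picks a free port."""
    return HTTPServer(("127.0.0.1", port), CustomerStub)


if __name__ == "__main__":
    make_stub_server().serve_forever()
```

The consuming team can point its code and its load tests at this stub until the real service is delivered, then swap the URL.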

Some tools make microservice virtualization an easy undertaking; Swagger Editor and Restito are two of many. You use these tools to specify the URLs that make up the microservice, and behind the scenes the tool implements the limited REST functionality required by that specification.

3. Don’t guess, test to a service level agreement

Testing to a service level agreement might seem like an obvious thing to do. But many companies are organized in such a way that testing personnel and product management never know about each other, let alone interact in an informative way. This is very un-Agile, and it’s a mistake. Testers waste valuable resources imagining the operational conditions they need to meet, and product managers end up frustrated when their expectations are not met.

The easiest way to avoid this problem altogether is to put product management and testing into a room, real or virtual, and come up with a Service Level Agreement (SLA) that makes product management’s expectations explicit and on which good load tests can be designed and implemented. The SLA does not need to be set in stone; if new needs emerge that go beyond the established SLA, manage and communicate the required changes. The important thing is that the document provides a concrete, common reference that sets the standards by which testing will be conducted and products will be accepted.
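Once the SLA exists, its targets can be encoded so that every load test run is judged against the same numbers. The following Python sketch assumes an SLA expressed as a 95th-percentile latency ceiling plus a maximum error rate; both the choice of metrics and the `Sla`/`check_sla` names are illustrative assumptions, and the percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Sla:
    # Hypothetical thresholds agreed between product management and testing.
    p95_latency_ms: float
    max_error_rate: float  # fraction of requests, e.g. 0.01 for 1%


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_sla(latencies_ms, errors, sla):
    """Compare one load test run against the SLA.

    latencies_ms: one latency per request sent; errors: how many of those requests failed.
    Returns a list of violation messages; an empty list means the run met the SLA.
    """
    if not latencies_ms:
        raise ValueError("no latency samples to evaluate")
    violations = []
    p95 = percentile(latencies_ms, 95)
    if p95 > sla.p95_latency_ms:
        violations.append(f"p95 latency {p95} ms exceeds {sla.p95_latency_ms} ms")
    error_rate = errors / len(latencies_ms)
    if error_rate > sla.max_error_rate:
        violations.append(f"error rate {error_rate:.2%} exceeds {sla.max_error_rate:.2%}")
    return violations
```

Keeping the thresholds in one shared definition means that when the SLA is renegotiated, the acceptance check changes in exactly one place.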

4. Don’t pin all your hopes on one runtime environment, use many

True story: A while back I was working on a microservice project deployed on one of the Big 3 cloud providers (AWS, Azure, or Google Cloud; I’ll leave it to you to guess which). We dedicated our deployment to a Midwest US data center. During load testing, we discovered that the microservice took too long to execute, and we couldn’t figure out why; the code ran great in-house. So we did the obvious thing and deployed the code to a region in Asia. The result? Everything was fine.

Did Asia have a better data center than Midwest US? We couldn’t say. But we had the numbers to prove there was a difference.

So what’s the takeaway? Dedicating our deployment to one region was a mistake. We learned our lesson: from then on, we ran our load tests in a variety of regions, particularly when testing for regressions.
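A lightweight way to catch a slow region is to collect the same measurements from every region and compare them. This Python sketch assumes hypothetical regional URLs in `REGION_ENDPOINTS` and flags any region whose median latency exceeds a chosen multiple of the fastest region’s median. The sequential probe in `sample_latencies` is only a stand-in for the timings a real load testing tool would report, not a load test in itself.

```python
import statistics
import time
import urllib.request

# Hypothetical regional deployments of the same microservice.
REGION_ENDPOINTS = {
    "us-central": "https://us-central.example.com/customers/123",
    "asia-east": "https://asia-east.example.com/customers/123",
}


def sample_latencies(url, requests=20, timeout=10):
    """Time sequential GET requests against one endpoint, in milliseconds."""
    latencies = []
    for _ in range(requests):
        start = time.perf_counter()
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies


def flag_slow_regions(results, tolerance=1.5):
    """Return regions whose median latency exceeds the fastest region's median by `tolerance`x."""
    medians = {region: statistics.median(samples) for region, samples in results.items()}
    fastest = min(medians.values())
    return sorted(region for region, median in medians.items() if median > fastest * tolerance)


if __name__ == "__main__":
    results = {region: sample_latencies(url) for region, url in REGION_ENDPOINTS.items()}
    print(flag_slow_regions(results))
```

Comparing medians rather than single requests keeps one unlucky network hop from flagging an otherwise healthy region.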

5. Don’t assume request/response is all there is to measure

While it’s true that load testing the performance of the requests and responses associated with a microservice is important, there’s more to consider. Behind the scenes there can be a lot more activity that needs to be observed and measured, such as reading from and writing to message queues and memory caches. Thus, when load testing microservices, it’s important to have monitors in place that observe the behavior of all the components associated with the microservice. When a microservice responds slowly, you need to know why. Typically, a good Application Performance Management (APM) or API Management solution provides the system monitors required to diagnose a poorly performing load test.
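To illustrate the idea on a small scale, the Python sketch below times each behind-the-scenes operation separately, so a slow cache or queue shows up on its own instead of being hidden inside a single response time. The `timed` helper, the in-memory `timings_ms` collector, and the `handle_get_customer` handler (with a dict standing in for the cache and a list standing in for the message queue) are all hypothetical; in practice an APM agent would collect and export these measurements for you.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Per-component timings collected during a load test run. This in-process collector
# is a hypothetical stand-in for what an APM agent would export to its own backend.
timings_ms = defaultdict(list)


@contextmanager
def timed(component):
    """Record how long the wrapped block takes under the given component name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings_ms[component].append((time.perf_counter() - start) * 1000)


def handle_get_customer(customer_id, cache, queue):
    """Hypothetical handler that touches a cache and a message queue behind the scenes."""
    with timed("cache.read"):
        customer = cache.get(customer_id)
    if customer is None:
        customer = {"id": customer_id}
        with timed("cache.write"):
            cache[customer_id] = customer
    with timed("queue.publish"):
        queue.append(("customer_viewed", customer_id))
    return customer


def summarize():
    """Report (call count, worst-case milliseconds) per component."""
    return {name: (len(samples), max(samples)) for name, samples in timings_ms.items()}
```

Run under load, a summary like this points directly at the component responsible for a slow response rather than only reporting that the response was slow.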

Also keep in mind that, for full-stack applications, a microservice that performs well under a direct load test might still cause trouble in User Experience (UX) testing. For example, a small change to a data structure might have unintended side effects on UI behavior. Companies that publish microservices as components of a larger application will benefit from also testing performance at the UI level.

The important thing to remember is that, to get a good performance profile, load testing often needs to go beyond simply measuring response times for REST calls to a single microservice.

Putting it all together

There’s more to microservices load testing than taxing the request capacity of a set of URLs. An efficient load test plan will use service virtualization to get more code under test faster. It will also identify the high-risk services to test early in the development cycle and leave more time-consuming tests for later. Testers and product managers will agree on a common Service Level Agreement that serves as the basis for load testing analysis. Tests will be conducted in a variety of runtime environments. Lastly, a good test plan will use Application Performance Management/API Management solutions in conjunction with comprehensive load testing tools to go beyond simply measuring the response time of a microservice’s URLs.

Avoiding the five mistakes I’ve described above will go a long way toward creating effective load tests that measure a broad scope of microservice behavior. Broad, comprehensive load testing implemented throughout the software development lifecycle is critical for building quality microservices that perform at scale.
