Why we bet on Anthropic, part 2: Comparing different computer using agents for testing

This blog explains why we chose Anthropic’s Computer Using Agent, and what makes our agentic AI so powerful.

In the prior blog, we previewed some of the reasons that we settled on using Anthropic’s Computer Using Agent (CUA) over other alternatives and promised to provide more information as to what and why, with facts and figures. If you haven’t read that blog, check it out here.

In this blog, I hope we can bring to light some of the “why” behind our decisions, and what makes our agentic AI so powerful.

Different techniques for computer using agents

To start with, let’s outline the basics. How does computer use actually work with an AI? Can’t generative AIs just generate text? Well, if we ignore diffusion models, TTS, and other modalities, then that’s sort of true, but it turns out you can do a lot with text.

Language models and tools

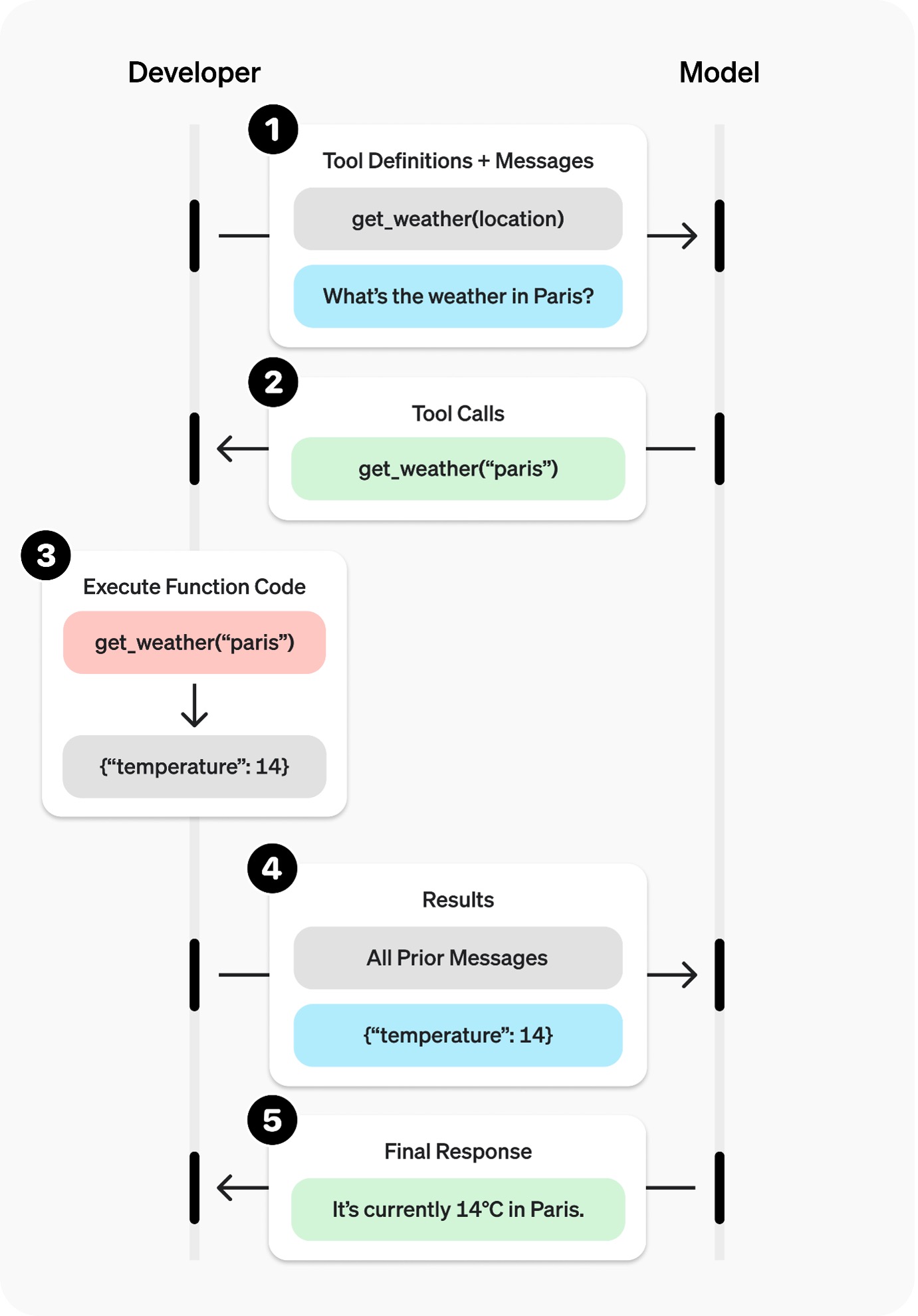

Let’s start with tools. Tools are not new to generative AI: the first tool-using models came out in May 2023, with OpenAI following swiftly in June of the same year. But what is tool use? There is a great guide here if you want details, but for the sake of brevity I will summarize.

Tool use gives the AI an alternative way to respond. Instead of coming back with an answer, it can ask a question, but only from a list of available methods you give it. These “methods” (functions/tools) are APIs for AI. They give the AI the ability to reach out and get real-time, up-to-date factual information that it can use to resolve the query. Here is a good example from OpenAI:

Figure 1: https://platform.openai.com/docs/guides/function-calling

In our application, the “reaching out” is giving the AI the ability to get the status of the app, see what’s on the screen, or take action based on what it sees. Reaching out to the human for help is also a tool; it’s just that you are on the other end of that API call as a biological servant to the new AI overlord. We’ll call that “Human in the loop” just to keep the populace calm.
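
To make the idea concrete, here is a minimal sketch of the tool-use round trip in Python. The tool names (`get_app_status`, `ask_human`), the JSON shape of the tool request, and the `dispatch` helper are all assumptions made up for illustration. They are not any vendor's API, and a real model would return the request as structured output.

```python
import json
from typing import Callable, Dict

# Hypothetical tools: names and return values are illustrative only.
def get_app_status() -> str:
    return "running"

def ask_human(question: str) -> str:
    # "Human in the loop": a real system would wait for a person's reply.
    return f"(human answer to: {question})"

# The list of "available methods" the model is allowed to choose from.
TOOLS: Dict[str, Callable[..., str]] = {
    "get_app_status": get_app_status,
    "ask_human": ask_human,
}

def dispatch(tool_request_json: str) -> str:
    """Run the tool the model asked for and return its result as text."""
    request = json.loads(tool_request_json)
    name = request["name"]
    if name not in TOOLS:
        # The model may only call tools from the list we gave it.
        return f"error: unknown tool {name!r}"
    return TOOLS[name](**request.get("arguments", {}))

# Instead of answering, the model responds with a tool request (assumed shape).
model_output = '{"name": "get_app_status", "arguments": {}}'
result = dispatch(model_output)  # this text is fed back to the model as context
```

The point of the sketch is the constraint: the model can only "ask" through the entries in `TOOLS`, and whatever comes back becomes new context for its next response.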

Seeing vs. reading: Dropping the cognitive load

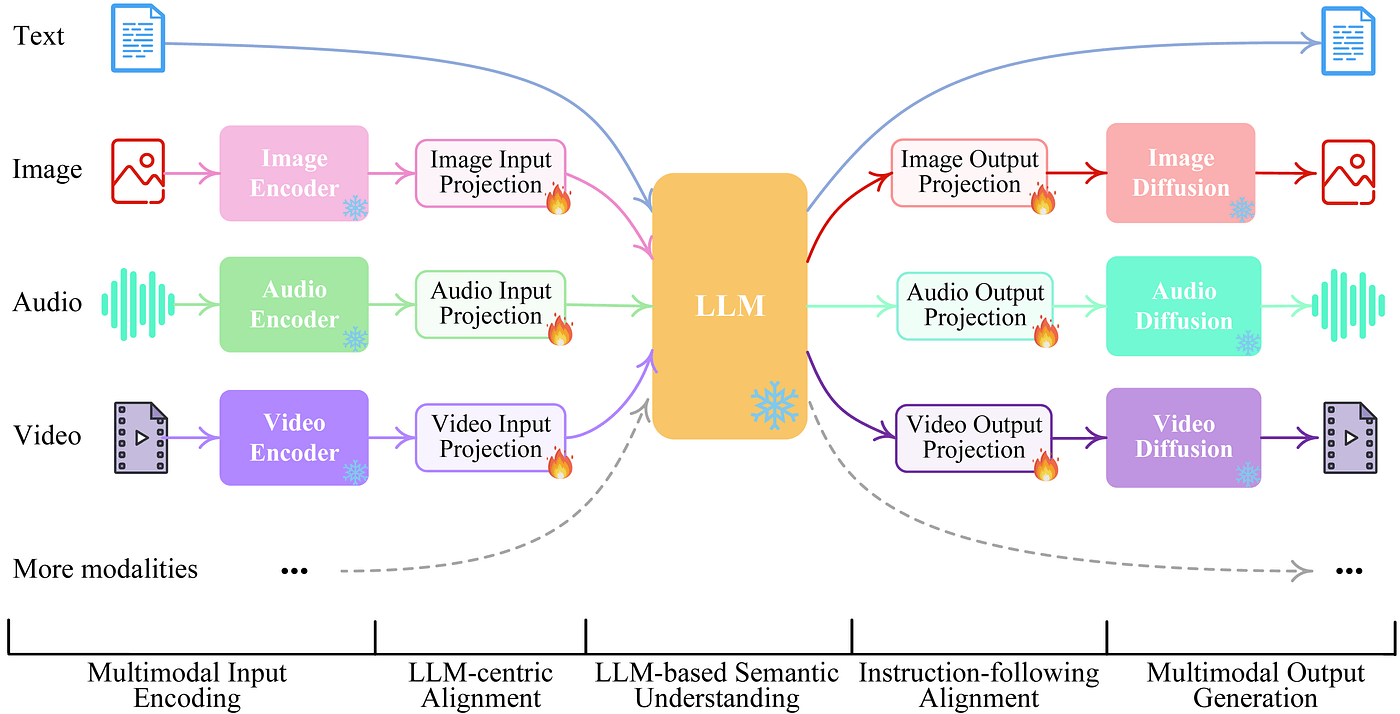

Having established the basics of tool use, let’s jump into the second capability we need, multimodality. When we say a model is multimodal (try saying that 10 times fast …), we often mean it accepts multiple forms of input (modes) but outputs a single mode (text). Many models’ multimodality means models modes morph moderated by mission (ok I’m done now …). Translated into English: You can have different input modes (prompts), and different output modes (responses). Here is a great diagram showing this:

The modes we care about most here are Vision and Text.

Why is Vision so important?

LLMs speak text, so why can’t we just feed them the HTML of an application, and have them determine the best action to take? The LLM knows how to code, right? It’s true that if you give an HTML snippet to ChatGPT and ask what’s going on, it will do a great job of telling you. But take it a level deeper, and we find out that AI is very much like us: there is such a thing as too much cognitive load. In the AI world, these are called “long-horizon tasks,” where there are multiple conceptual steps to perform to get to the answer.

Let’s outline this long horizon for the task of taking in an HTML page, and spitting out actions:

- Take prompt from human (“Fill out this form with … details”)

- Describe the HTML with relation to the task

- Isolate the element you want to act on

  a. Map it to some input required by the human input

  b. Determine based on structure if this element is the correct one

- Determine if the action is possible

  a. Is that element visible?

  b. Is it disabled?

  c. …

- Call a tool that does the action
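
To show how much of this chain is plain rendering-engine bookkeeping, here is a rough sketch of the "isolate the element" and "is the action possible" steps using only Python's standard `html.parser`. The sample page, the `find_actionable_input` helper, and the visibility check (inline `display:none`, `hidden`, and `type="hidden"` only) are simplifying assumptions. A real browser also has to consider stylesheets, ancestor elements, overlays, and scroll position.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional

Attrs = Dict[str, Optional[str]]

class InputCollector(HTMLParser):
    """Collects the attributes of every <input> element in a page."""
    def __init__(self) -> None:
        super().__init__()
        self.inputs: List[Attrs] = []

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            self.inputs.append(dict(attrs))

def is_actionable(attrs: Attrs) -> bool:
    # "Determine if the action is possible": visible and not disabled.
    style = (attrs.get("style") or "").replace(" ", "").lower()
    hidden = ("hidden" in attrs
              or attrs.get("type") == "hidden"
              or "display:none" in style)
    return not hidden and "disabled" not in attrs

def find_actionable_input(html: str, field_name: str) -> Optional[Attrs]:
    # "Isolate the element you want to act on" by its name attribute.
    collector = InputCollector()
    collector.feed(html)
    for attrs in collector.inputs:
        if attrs.get("name") == field_name and is_actionable(attrs):
            return attrs
    return None

page = """
<form>
  <input name="email" style="display: none">
  <input name="email" id="email-visible">
  <input name="phone" disabled>
</form>
"""
target = find_actionable_input(page, "email")  # the second, visible email input
```

Even this toy version needs explicit rules for visibility and state, and it still ignores most of what a rendering engine does. That is the work the text-only approach pushes onto the model.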

Most of the cognitive load in this scenario is asking the AI to act like a browser rendering engine, figuring out the relation of elements to each other, visibility, etc. Sometimes, depending on how large the context is, this task may be impossible. Here is the key fact though: we don’t work that way as humans. If we wanted to find an element to act on in Chrome, most of us would just visually locate it, without spending much time or energy thinking about our actions. By using vision models, we can do much the same thing with AI, finding something without the added cognitive load. In the prior blog, we went into detail about how AI can see, so I won’t repeat that here! But one key point to reiterate is that multimodal AIs are trained to understand what’s present in an image, making the chain of reasoning steps much shorter than it is for a model working from raw HTML.

The impact of training

This leads us to the final point: how AI models are trained is crucial to their success for a particular use case. It has been said that language models are few-shot learners. That proved prescient in most cases, but only when the basic competency was already there.

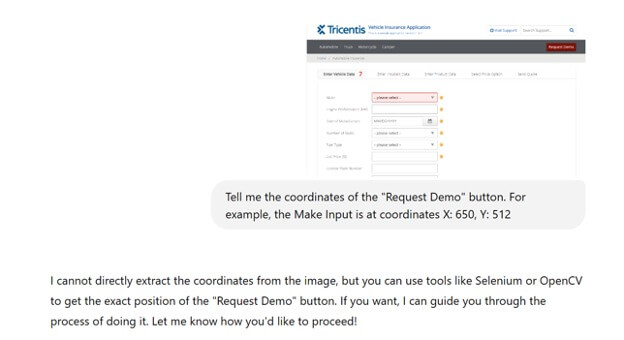

Take this example: we gave the same prompt to GPT-4o and Claude 3.7 Sonnet.

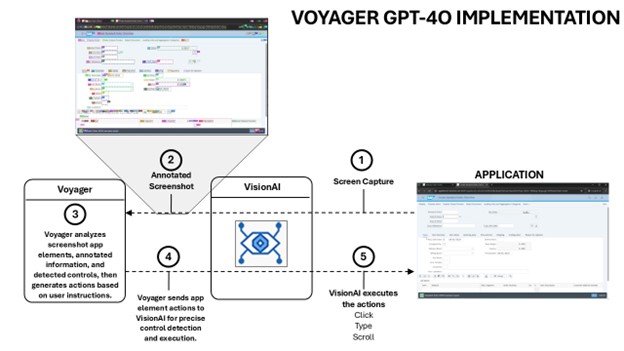

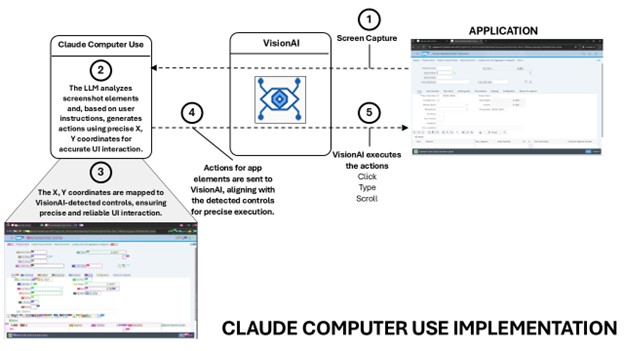

Figure 2: GPT-4o

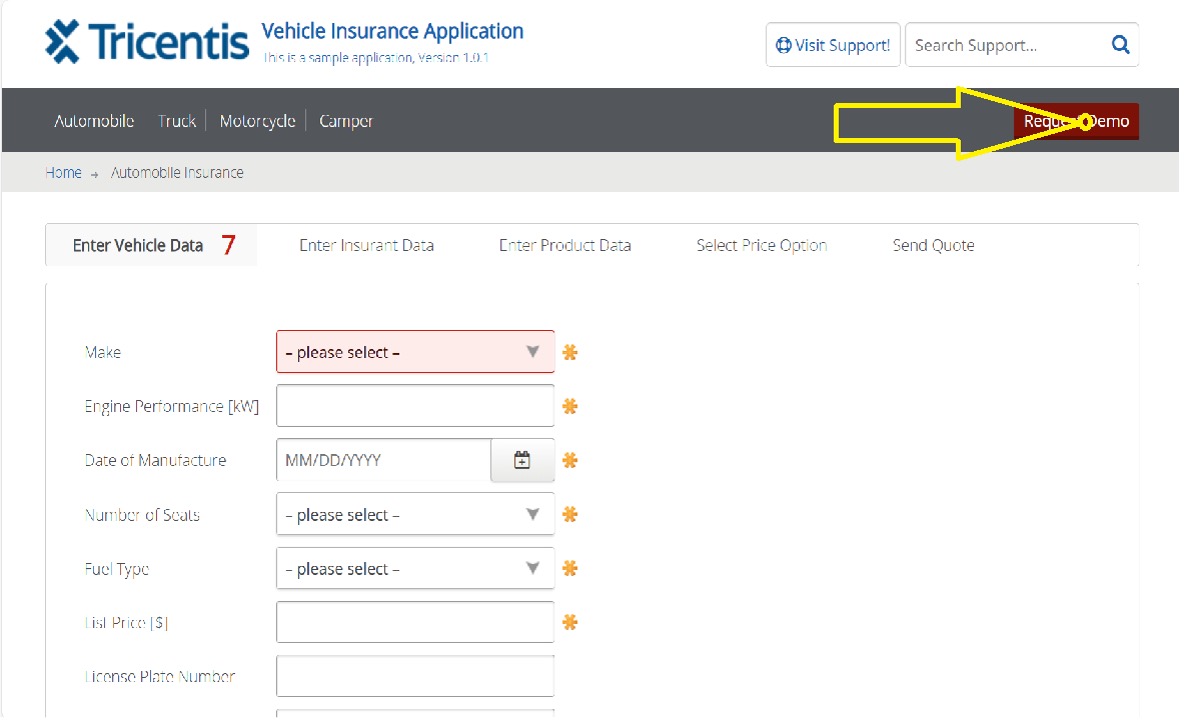

Figure 3: Claude

The Claude model has been trained on how to precisely locate actions within an image, whereas the GPT model has not. No amount of prompting or examples will be able to overcome that deficiency, as it’s an inherent, trained trait of the Claude model.

Different tools in the market

Closed tools (Operator, Google Mariner)

Until very recently, the OpenAI Operator model had no API, which kept us from rolling up our metaphorical sleeves and figuring out what value it provides. Closed models don’t allow you to plug in your own capabilities, rendering them mostly useless as enterprise tools. No one wants to have their tests run on OpenAI’s machine in their cloud.

Open tools (Microsoft OmniParser/OmniTool)

OmniParser is a system for identifying interactive controls or regions on a computer interface. It uses a combination of Optical Character Recognition (OCR) and Control Detection neural networks to pick controls that would be useful for a computer use agent. This improves on techniques used by competing solutions, as only the most appropriate controls are output, removing unnecessary controls and regions that can confuse the LLM predictions for next action.

The networks employed are YOLOv8 for control/icon detection and EasyOCR or PaddleOCR for text recognition. The outputs are combined to generate control regions with text content. Any controls without text content (e.g., icons, images, empty controls) are sent to the Florence2 classification network to predict the type of control – this prediction is used as the “content” for those controls.

OmniParser is the backend to OmniTool. OmniTool takes the predictions from OmniParser and feeds them to an LLM to determine the action to take on the current screen. Multiple LLMs are supported, including OpenAI (4o/o1/o3-mini), DeepSeek (R1), Qwen (2.5VL), and Anthropic (Claude 3.5v2).

OmniTool works similarly to existing agentic navigation agents, but performance is improved by annotating the screenshot with the more ideal set of controls from OmniParser. Competing solutions, such as web agents, typically parse the DOM for control regions, and this results in excess and sometimes hidden controls being presented to the LLM.

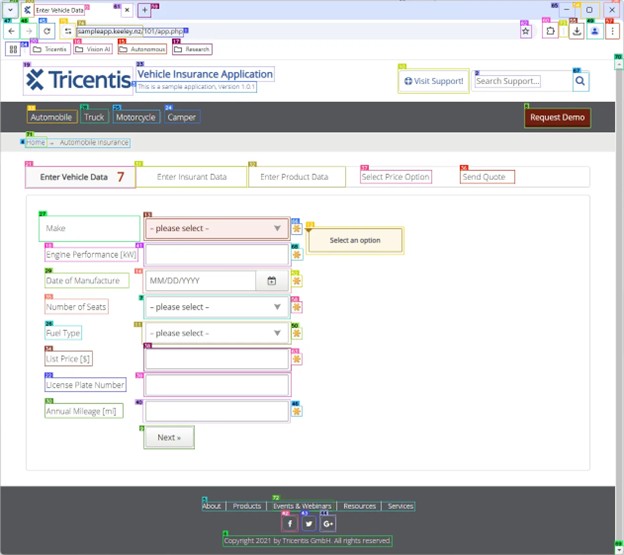

The information sent to the LLM in the prompt includes the raw screenshot, an annotated screenshot, and a list of controls matching the annotations.

The OmniParser annotation data sent to the LLM only includes the ID, Control Type (text/icon), and Content:

ID 0: Text: Enter Vehicle Data
ID 1: Text: sampleapp.keeley.nz/101/app.php
ID 2: Text: Search Support....
ID 3: Text: This is a sample application, Version 1.0.1e
ID 4: Text: Home + Automobile Insurance.
ID 5: Text: About ProductsEvents & WebinarsResources Services
ID 6: Text: Copyright 2021 by Tricentis GmbH. All rights reserved.
ID 7: Icon: please select -
ID 8: Icon: Request Demo
ID 9: Icon: Next >
ID 10: Icon: @ Visit Support!
ID 11: Icon: please select -
ID 12: Icon: Select an option
ID 13: Icon: please select -
...

The only control types are “Text” and “Icon.” Icon represents anything that is not text.
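
Because each annotation follows the same `ID <n>: <Type>: <Content>` pattern, it is easy to turn back into structured data. The sketch below assumes one annotation per line. The `Control` tuple and `parse_annotations` helper are hypothetical names for illustration and are not part of OmniParser.

```python
import re
from typing import List, NamedTuple

class Control(NamedTuple):
    control_id: int
    kind: str       # "Text" or "Icon", the only two types in the output
    content: str

# One annotation per line: "ID <n>: <Type>: <Content>"
LINE_RE = re.compile(r"^ID (\d+): (Text|Icon): (.*)$")

def parse_annotations(text: str) -> List[Control]:
    """Parse annotation lines, skipping anything that does not match."""
    controls = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            controls.append(
                Control(int(match.group(1)), match.group(2), match.group(3))
            )
    return controls

sample = """ID 0: Text: Enter Vehicle Data
ID 8: Icon: Request Demo
ID 9: Icon: Next >"""

controls = parse_annotations(sample)
icons = [c.content for c in controls if c.kind == "Icon"]
```

Note how little the model gets to work with: an ID, one of two types, and a text label. There is no position, state, or hierarchy in this list.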

When using Claude 3.5v2, Claude’s native computer-use tool is called to generate actions, which are based on coordinates. In this case, the annotated screenshot is not presented; only the raw screenshot and OmniParser data are sent. We found that because Claude 3.5v2 is already excellent at navigation and screen interpretation, the extra OmniParser data offers only a minor improvement in steering.

For all other LLMs, a longer prompt is used, specifying actions to take on one of the control IDs present in the OmniParser output. This greatly improves performance for agentic control based on these models.

OmniParser accuracy compared to Vision AI

We took a selection of 100 human-labeled screenshots and compared the accuracy of OmniParser output to our own Vision AI detection system.

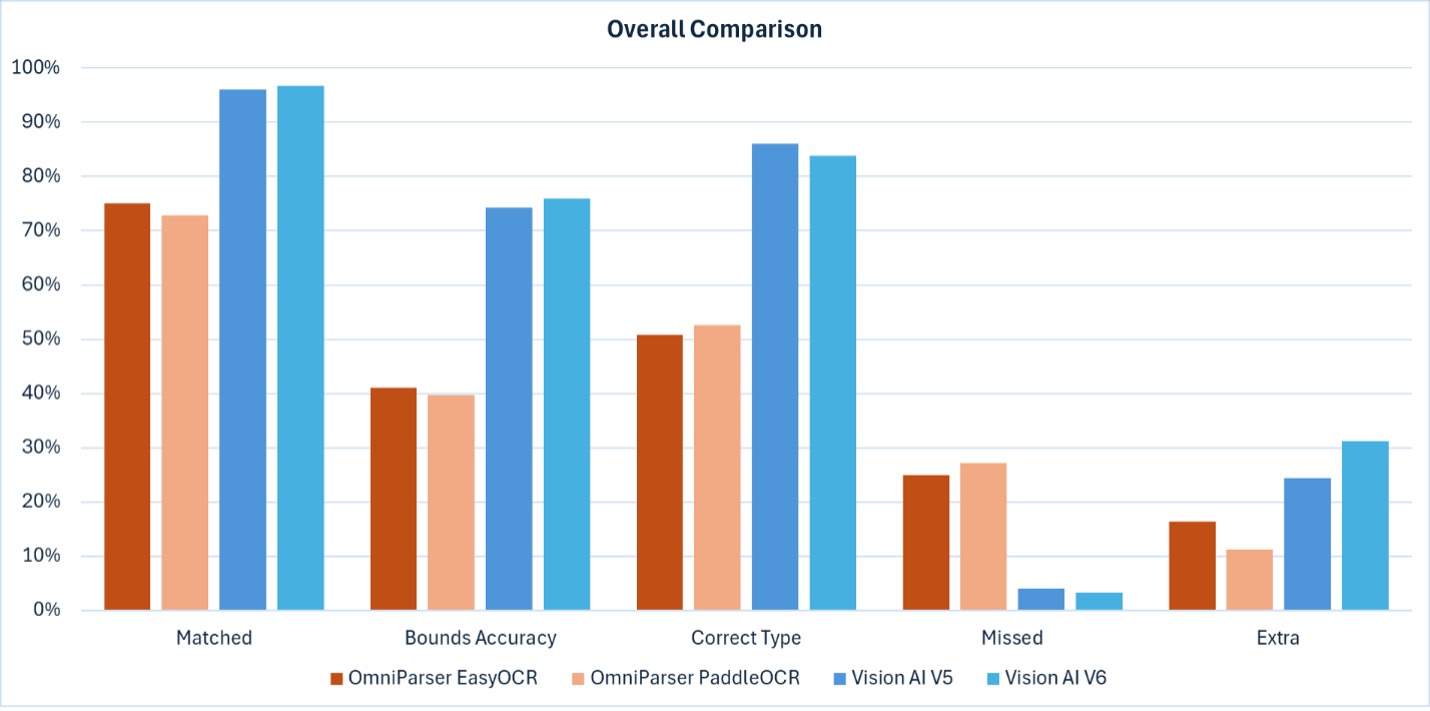

Overall comparison

Here we compare key metrics for OmniParser (orange) versus Vision AI (blue). The two OmniParser variants are EasyOCR and PaddleOCR for text detection. The two Vision AI variants are using our latest Version 5 and Version 6 control detection networks. OCR for Vision AI is based on Azure’s Read API v3.2 for both variants.

- Matched – Percentage of expected controls that were found in the output

- Bounds accuracy – How accurately the bounds of each control matched the expected location

- Correct type – Percentage of controls that matched the expected type of control

- Missed – Percentage of expected controls that were not found in the output

- Extra – Percentage of controls that were in the output but were not in the labelled set
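
As a rough illustration of how metrics like these can be computed, here is a sketch that matches predicted boxes to labeled boxes and derives Matched, Missed, Extra, and Bounds accuracy. The source does not describe the actual matching procedure, so everything here is an assumption: intersection over union (IoU) as the overlap measure, the 0.5 threshold, greedy one-to-one matching, and Extra measured as a share of the predicted controls. The Correct type metric is omitted for brevity.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    width = min(a[2], b[2]) - max(a[0], b[0])
    height = min(a[3], b[3]) - max(a[1], b[1])
    if width <= 0 or height <= 0:
        return 0.0
    inter = width * height
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def score(expected: List[Box], predicted: List[Box],
          threshold: float = 0.5) -> Dict[str, float]:
    """Greedy one-to-one matching; assumes at least one expected control."""
    unused = list(predicted)
    overlaps: List[float] = []
    for exp in expected:
        best = max(unused, key=lambda p: iou(exp, p), default=None)
        if best is not None and iou(exp, best) >= threshold:
            overlaps.append(iou(exp, best))
            unused.remove(best)  # each prediction can match only once
    matched = len(overlaps)
    return {
        "matched_pct": 100 * matched / len(expected),
        "missed_pct": 100 * (len(expected) - matched) / len(expected),
        "extra_pct": 100 * len(unused) / len(predicted) if predicted else 0.0,
        "bounds_accuracy": sum(overlaps) / matched if matched else 0.0,
    }

# Made-up boxes: one exact match, one missed control, one extra prediction.
expected = [(0, 0, 10, 10), (20, 0, 30, 10)]
predicted = [(0, 0, 10, 10), (100, 100, 110, 110)]
metrics = score(expected, predicted)
```

Under these assumptions an over-sized prediction is penalized twice: its IoU drops, which lowers bounds accuracy, and if it falls below the threshold it counts as both a miss and an extra.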

Overall, Vision AI is far superior to OmniParser in detection accuracy.

Typically, OmniParser predictions are larger and/or less detailed than Vision AI predictions. For the use case of LLM Navigation agents, this has the benefit of reduced prompt size (fewer controls), which improves control selection. However, it can lead to loss of fine control over individual parts of an interface.

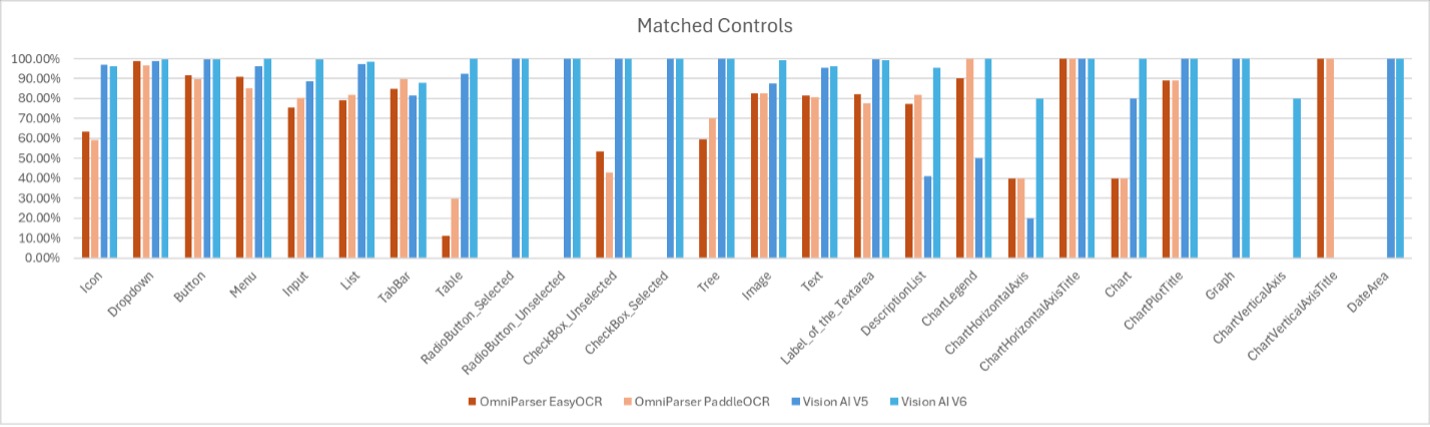

Matches by control type

This chart shows how well the different control types are detected by each network.

- Simple control types are detected well by both networks; Vision AI has a slight advantage.

- More complex controls are detected much better by Vision AI, such as tables, lists, charts, graphs, and trees.

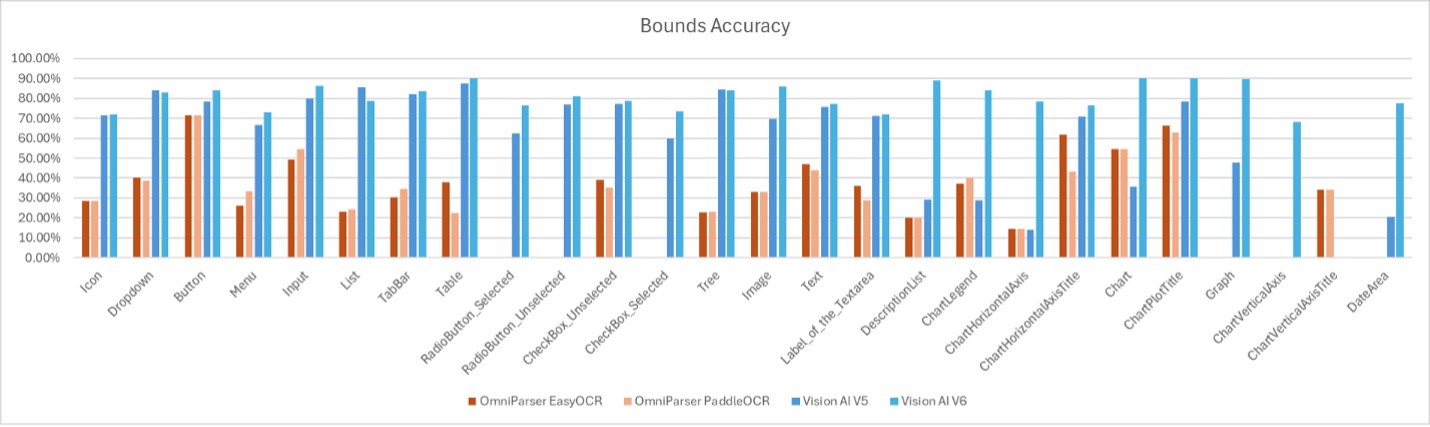

Bounds accuracy by control type

This chart shows the accuracy of the detected bounds for each control type.

- Generally, Vision AI is superior in bounds detection accuracy

- OmniParser bounds are often over-sized

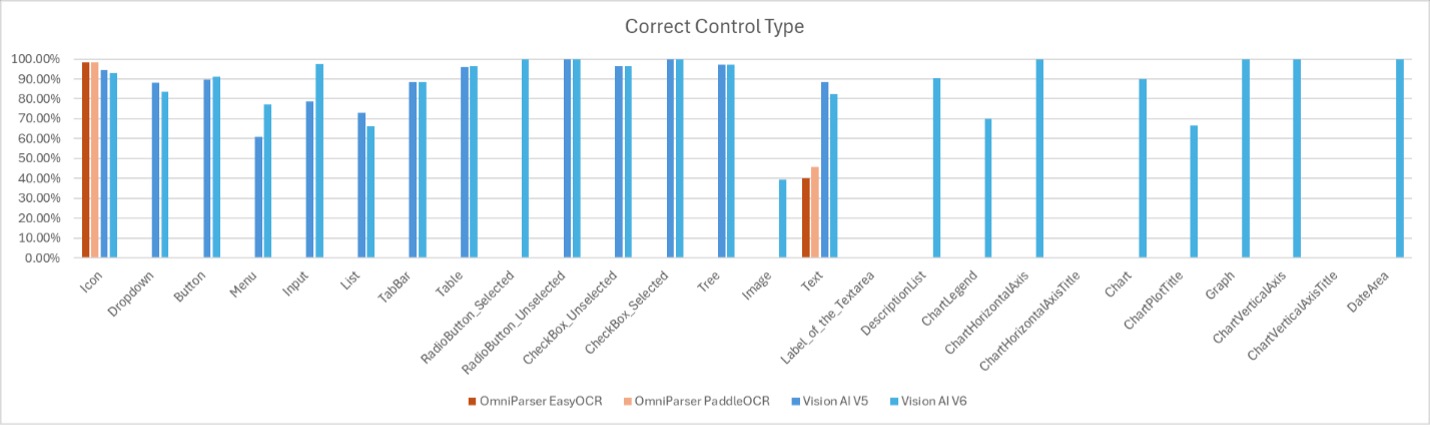

Control type accuracy

This chart shows the accuracy of the detected control type.

- OmniParser only has two control types – Text and Icon (Icon is used for anything that is not Text).

- Vision AI V5 includes basic control types and compound types like List, Tree, Table. Vision AI can also detect the state for some controls like checkboxes and radio buttons.

- Vision AI V6 adds more control types for charts and graphs.

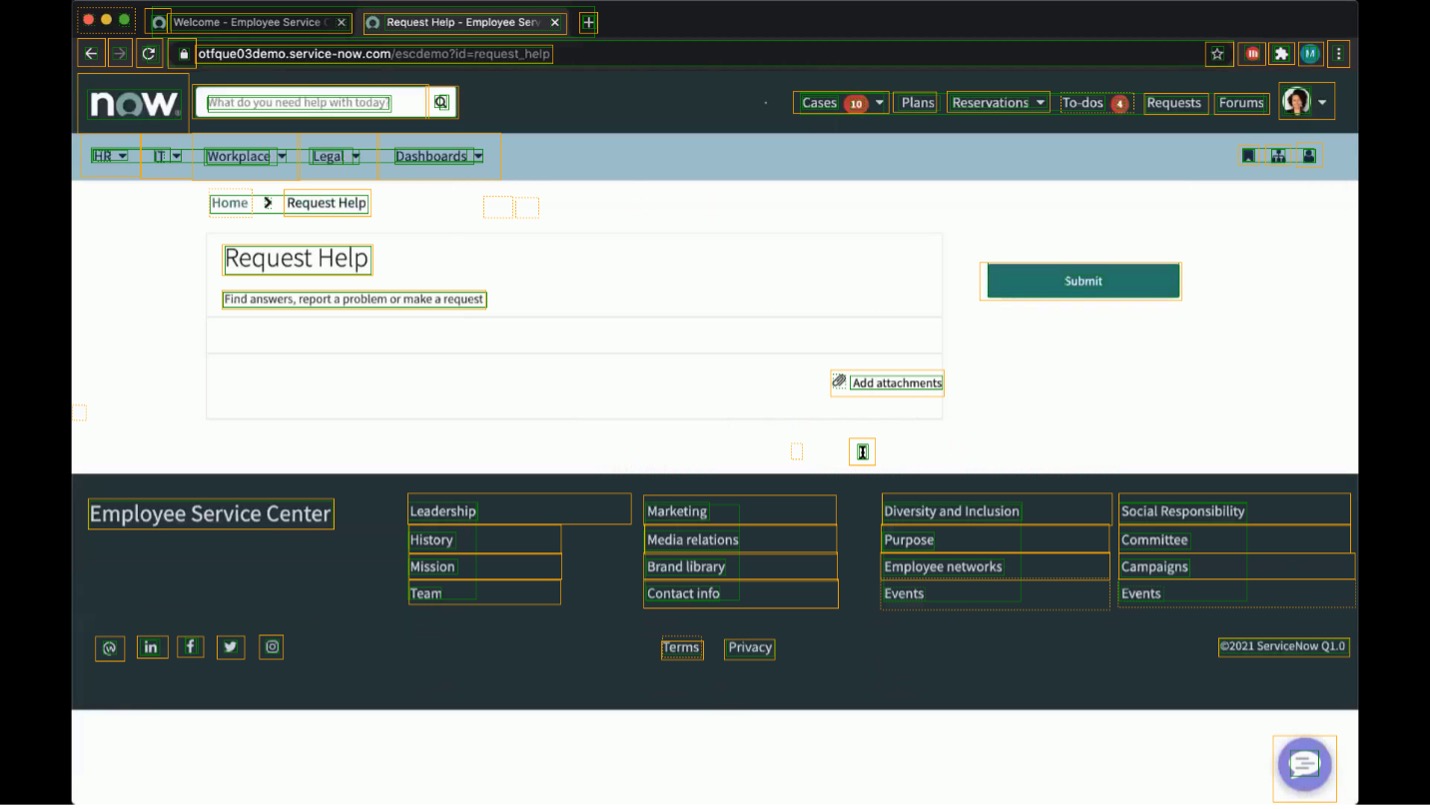

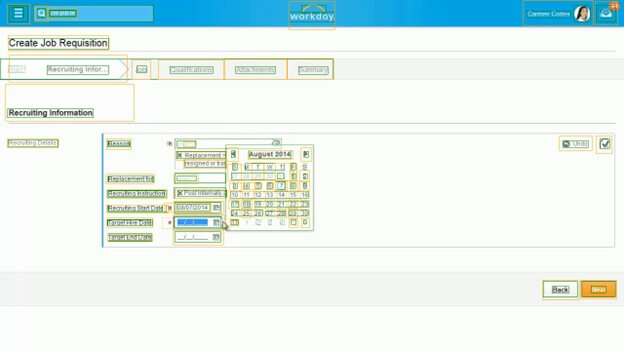

Example of failure modes for OmniParser

Green boxes show expected controls and yellow boxes show OmniParser predictions.

- The text links in the footer have over-sized boundaries, which leads to incorrect automation if clicking in the center of the bounds – the text link would be missed.

- “Add Attachments” is predicted as a single control, instead of separate icon and text controls.

- Complex controls such as lists, trees, and tables are typically not detected.

- The date field predictions cover the whole item. The icon and text input areas are not separated.

- While the simplified predictions reduce the amount of data required for LLM inference, this comes at the expense of inhibiting fine control of the interface (e.g., the calendar icon may not be able to be clicked directly).
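
The over-sized bounds problem is simple geometry, as this small sketch shows. The coordinates are made-up numbers chosen to resemble a short footer link inside a much wider predicted box. They are not taken from the screenshots above.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom)
Point = Tuple[float, float]

def center(box: Box) -> Point:
    """Midpoint of a box, the usual default click target."""
    return ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)

def contains(box: Box, point: Point) -> bool:
    x, y = point
    return box[0] <= x <= box[2] and box[1] <= y <= box[3]

# Hypothetical footer link (80 px wide) and an over-sized prediction (360 px wide).
true_link = (40, 500, 120, 516)
predicted = (40, 500, 400, 516)

click_point = center(predicted)               # (220.0, 508.0)
hits_link = contains(true_link, click_point)  # False: the click lands right of the link
```

The prediction fully covers the link, so it counts as a detection, yet automation that clicks its center never touches the link itself.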

Platforms (Claude Computer Use)

AI-powered computer-using models are designed to interact with digital interfaces autonomously, simulating human-like actions such as clicking, typing, scrolling, and navigating software applications. These models combine vision processing, structured data extraction, and reasoning to understand and execute tasks on computers or web browsers.

What is Claude Computer Use?

Claude’s Computer Use is a multimodal capability that enables it to see, understand, and interact with computer interfaces in a human-like manner. This capability allows Claude to move the cursor, click buttons, and type text, effectively emulating user interactions with software applications.

- Visual interpretation: Claude processes screenshots of the computer screen to understand the current state of the interface. This visual input allows the model to identify various UI elements such as buttons, text fields, and menus.

- Action planning: Based on the visual input and user instructions, Claude determines the necessary actions to achieve the desired outcome. This involves reasoning about which UI elements to interact with and in what sequence.

- Precise interaction: Claude calculates the exact pixel coordinates required to move the cursor and performs actions like clicking or typing.

This combination of visual interpretation, action planning, and precise interaction enables Claude to perform tasks across various software applications.
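
The three capabilities form a loop: take a screenshot, plan the next action, perform it, repeat. Here is a minimal offline sketch of that loop. The action dictionaries (`left_click` with a `coordinate`, `type` with `text`), the `FakeScreen` class, and the scripted planner are assumptions for illustration. They stand in for the real model and desktop and should not be read as Anthropic's API.

```python
from typing import Any, Callable, Dict, List, Optional

Action = Dict[str, Any]

class FakeScreen:
    """Stand-in for a real desktop: records actions instead of performing them."""
    def __init__(self) -> None:
        self.log: List[str] = []

    def screenshot(self) -> bytes:
        return b"<png bytes>"  # placeholder image data

    def perform(self, action: Action) -> None:
        if action["action"] == "left_click":
            x, y = action["coordinate"]
            self.log.append(f"click at ({x}, {y})")
        elif action["action"] == "type":
            self.log.append(f"type {action['text']!r}")
        else:
            raise ValueError(f"unsupported action: {action['action']}")

def run_agent(screen: FakeScreen,
              plan: Callable[[bytes, str], Optional[Action]],
              instruction: str,
              max_steps: int = 10) -> List[str]:
    for _ in range(max_steps):
        image = screen.screenshot()        # visual interpretation: what is on screen?
        action = plan(image, instruction)  # action planning: what to do next?
        if action is None:                 # the planner reports the task is done
            break
        screen.perform(action)             # precise interaction at pixel coordinates
    return screen.log

# Scripted stand-in for the model so the sketch runs without any network calls.
scripted = iter([
    {"action": "left_click", "coordinate": [312, 148]},
    {"action": "type", "text": "Jane Doe"},
    None,
])
log = run_agent(FakeScreen(), lambda image, task: next(scripted),
                "Fill in the name field")
```

The `max_steps` cap is a design choice worth keeping in any real loop, since a confused agent can otherwise keep acting indefinitely.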

Claude Computer Use vs. OpenAI Operator vs. OmniParser

Claude’s Computer Use, OpenAI Operator, and OmniParser allow AI to interact with user interfaces, but their approaches differ significantly. Here’s a breakdown of the differences:

Claude’s Computer Use works in a top-down manner:

- Understands the task first – Given an instruction (e.g., “Click the ‘Submit’ button”), Claude first determines which UI element it needs to interact with.

- Detects the relevant UI element – It then scans the screen to locate the button, field, or menu that matches its reasoning.

- Generates an action + coordinates – After identifying the element, Claude calculates its X-Y coordinates and executes the appropriate action (click, type, etc.).

OpenAI’s Operator follows a bottom-up approach when interacting with web interfaces and operates within a custom version of Chrome:

- Extracts UI elements first – Operator retrieves structured webpage data to identify buttons, text fields, links, and other interactive elements.

- Analyzes the UI elements – After extracting these elements, Operator determines which ones are relevant to the given task. It considers attributes like labels, position, and function to filter out unnecessary elements.

- Chooses an action and executes it – Based on its reasoning, Operator selects the most appropriate action (click, type, scroll, etc.). Instead of using pixel coordinates, it interacts with elements directly using structured webpage data within its custom Chrome environment.

- Integrates with partner website APIs – Operator can make API calls to partner websites, retrieving data or executing interactions beyond just UI-based automation.

OmniParser also works bottom-up:

- Detects all interactable UI elements first – It pre-processes the screen and identifies buttons, text fields, and controls before deciding on an action.

- Extracts and filters the best elements – Using OCR and object detection (YOLOv8, PaddleOCR, Florence2), it removes unnecessary or hidden elements.

- Passes the filtered elements to the LLM – Instead of having the AI scan the entire image, OmniParser provides an annotated list of available UI elements.

- LLM selects an action based on pre-detected elements – The AI then determines which element to interact with and how (click, type, etc.).

| Feature | Claude Computer Use | OpenAI Operator | OmniParser |
| --- | --- | --- | --- |
| Scope | Works across desktop applications and web interfaces | Works exclusively in Chrome for web automation | Works in structured applications where UI elements can be extracted and analyzed |
| Processing order | Decides action first → Finds UI element → Retrieves coordinates | Extracts UI elements first → LLM selects action → Executes | Extracts UI elements first → Filters unnecessary ones → LLM selects action → Executes |
| Environment | Works on any software or web UI | Operates in a custom version of Chrome | Can function in various environments but primarily targets structured UI applications |
| UI element handling | Uses vision-based detection to find elements dynamically | Uses structured webpage data to extract and interact with UI components | Uses OCR + object detection (YOLOv8, PaddleOCR) to detect and filter UI elements |
| LLM dependency | Operates autonomously, without requiring an external LLM | Operates autonomously, without requiring an external LLM | Requires an LLM to determine the action after detecting and filtering UI elements |
| Coordinate system | Returns pixel coordinates for UI interaction | Uses structured webpage data for precise element positioning | Uses OCR-based bounding boxes for positioning detected UI elements |
| Execution method | Simulates human-like mouse and keyboard actions based on element coordinates | Makes API calls with partner websites and performs interactions with web elements directly within Chrome | Passes filtered UI elements to an LLM for reasoning and execution |
| Open/closed source | Closed source | Closed source | Open source |

Best of breed – Combining Claude Computer Use with Vision AI

Integrating Claude Computer Use with Tricentis Vision AI creates a powerful AI-driven test automation and UI interaction system by combining coordinate-based execution with precise control matching. This approach eliminates the need for traditional control identification methods (such as name, ID, or attributes) and instead ensures accurate execution through coordinate-based control matching.

- Traditional test automation often fails when UI elements have duplicate names, dynamic IDs, or missing attributes.

- Claude Computer Use returns exact coordinates for an action.

- Tricentis Vision AI validates the coordinates and matches them to the correct UI control, ensuring precise execution.

- The combined system automatically generates Tricentis Tosca test scripts by pairing Claude Computer Use’s coordinate-based execution with Tricentis Vision AI’s control matching, enabling scalable, reliable, and repeatable test automation.
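
The core of coordinate-based control matching is a hit test: given a point, find the detected control that contains it. The sketch below is one plausible way to do this and is not the Vision AI implementation. The `DetectedControl` class, the sample controls, and the "smallest containing box wins" rule are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class DetectedControl:
    name: str
    kind: str
    box: Tuple[int, int, int, int]  # (left, top, right, bottom)

    def contains(self, x: int, y: int) -> bool:
        left, top, right, bottom = self.box
        return left <= x <= right and top <= y <= bottom

    def area(self) -> int:
        left, top, right, bottom = self.box
        return (right - left) * (bottom - top)

def match_control(controls: List[DetectedControl],
                  x: int, y: int) -> Optional[DetectedControl]:
    """Return the smallest detected control containing the point, if any."""
    hits = [c for c in controls if c.contains(x, y)]
    return min(hits, key=DetectedControl.area, default=None)

# Hypothetical detections: a button nested inside a large table region.
controls = [
    DetectedControl("Orders", "Table", (0, 100, 800, 600)),
    DetectedControl("Submit", "Button", (350, 520, 450, 560)),
]
matched = match_control(controls, 400, 540)   # inside both; Submit is smaller
unmatched = match_control(controls, 900, 50)  # outside every control
```

Preferring the smallest containing box resolves nesting (a button inside a table), and a `None` result is a useful guardrail signal that the agent's coordinates point at nothing recognizable.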

Challenges with Claude Computer Use alone

While Claude Computer Use provided a solid foundation for UI automation, it did not work optimally out of the box for complex enterprise workflows.

Handling complex multi-step controls:

- Table steering issues – Struggled with navigating and interacting with table-based elements, making it difficult to automate workflows involving structured data.

- Inefficient multi-step actions – Issued separate move, click, and type commands instead of a single optimized interaction, leading to redundant actions and longer execution times.

How Vision AI, custom tooling, & guardrails improved performance

By integrating Vision AI, custom tooling, and guardrails, we addressed Claude Computer Use’s limitations in handling complex UI interactions, table steering, and redundant actions. Vision AI provided accurate control detection, while our custom automation guardrails eliminated inefficiencies and improved execution accuracy, making enterprise test automation more reliable and scalable.

Control detection and contextual awareness:

- Vision AI’s AI-powered control detection accurately identified and matched UI elements without relying on selectors or manual tagging.

- Improved table steering, allowing for seamless interaction with complex UI structures.

Eliminating redundant actions:

- Our automation guardrails optimized execution paths, reducing unnecessary intermediate actions (e.g., moving, clicking, and typing separately) into a single, efficient step.

- This significantly improved efficiency, accuracy, and test execution speed.
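
As an illustration of collapsing redundant actions, here is a sketch that merges a move, click, and type sequence on the same point into a single step. The action names and the merged `enter_text` action are hypothetical. This shows the general idea only and is not the guardrail logic used in the product.

```python
from typing import Any, Dict, List

Action = Dict[str, Any]

def collapse(actions: List[Action]) -> List[Action]:
    """Merge move -> click -> type on the same point into one 'enter_text' step."""
    result: List[Action] = []
    i = 0
    while i < len(actions):
        window = actions[i:i + 3]
        is_triplet = [a["action"] for a in window] == ["move", "click", "type"]
        if is_triplet and window[0]["coordinate"] == window[1]["coordinate"]:
            result.append({
                "action": "enter_text",
                "coordinate": window[0]["coordinate"],
                "text": window[2]["text"],
            })
            i += 3  # consumed all three intermediate actions
        else:
            result.append(actions[i])
            i += 1
    return result

raw = [
    {"action": "move", "coordinate": (312, 148)},
    {"action": "click", "coordinate": (312, 148)},
    {"action": "type", "text": "Jane Doe"},
    {"action": "click", "coordinate": (400, 540)},
]
optimized = collapse(raw)  # four raw actions become two steps
```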

Automatic Tosca script generation for scalable testing:

- The combined approach automatically generates Tosca test scripts, ensuring:

  - Repeatable and scalable automation across different environments.

  - More reliable test execution by leveraging AI-driven control validation.

The proof is in the pudding

We tested Voyager v1.2.0 (Current GPT-4o Implementation) and Claude Computer Use to evaluate how well each model handled long-horizon test execution — executing complex, multi-step workflows autonomously for end-to-end Order-to-Cash (O2C) automation while maintaining context, correcting errors, and minimizing human intervention.

This experiment leveraged Claude Computer Use + Tricentis Vision AI, a hybrid AI-driven automation approach, to optimize test execution accuracy and efficiency.

What is Order-to-Cash (O2C)?

Order-to-Cash is a critical business process that covers the entire lifecycle of a customer order — from order creation to payment collection. This includes:

- Sales order creation – Entering customer orders into the system.

- Order processing – Validating, confirming, and preparing orders for fulfillment.

- Shipment & delivery – Coordinating logistics and tracking order status.

- Invoicing & payment – Generating invoices, tracking payments, and closing the transaction.

Given its complexity, O2C automation must handle various system interactions, validate data, and adapt to changing UI conditions — making it an ideal test case for evaluating AI-driven test execution.

Key findings

We found that Claude Computer Use had:

- Better efficiency – Its efficiency score was about 15% higher in relative terms (0.52 vs. 0.45), meaning fewer redundant actions.

- Higher accuracy in function execution – Its share of successful actions was 11 percentage points higher (98% vs. 87%), reducing errors in UI interactions.

- More autonomy – It required no human intervention, versus Voyager’s one request for assistance.

| Metric | Voyager v1.2.0 | Claude Computer Use |
| --- | --- | --- |
| Test Completed Successfully? | ✅ Yes | ✅ Yes |
| Human Assistance Required? | ❗ 1 time | ✅ 0 times |
| Efficiency Score (Higher = Better) | 🔴 0.45 | 🟢 0.52 |
| Successful Actions (%) | 🔴 87% | 🟢 98% |
| Data Entry Accuracy (%) | 🟢 98% | 🟢 98% |
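
The headline percentages can be checked against the table with a few lines of arithmetic. How the 15% and 11% figures were originally derived is not stated, so the reading below is an assumption: a relative gain for the efficiency score, and a percentage-point difference for successful actions.

```python
def relative_gain(baseline: float, candidate: float) -> float:
    """Relative improvement of candidate over baseline, in percent."""
    return 100 * (candidate - baseline) / baseline

# Efficiency score: 0.45 (Voyager) vs. 0.52 (Claude Computer Use)
efficiency_gain = relative_gain(0.45, 0.52)     # roughly 15.6% relative

# Successful actions: 87% vs. 98%
accuracy_gain_points = 98 - 87                  # 11 percentage points
accuracy_gain_relative = relative_gain(87, 98)  # roughly 12.6% relative
```

Stating which of the two measures is meant matters: the same 87% to 98% change is 11 percentage points but about 12.6% in relative terms.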